The Time for Robotic Psychiatry Has Arrived

With the global uptake of ChatGPT, we finally have "intelligent" robots interacting with people in new environments. AI robots are now a reality, and Robotic Psychiatry will be needed to help integrate them into society.

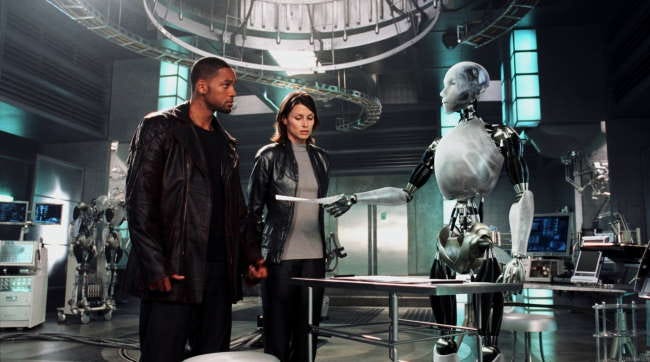

Psychiatrist Dr. Susan Calvin (Bridget Moynahan) and Det. Del Spooner (Will Smith) with an AI robot named Sonny in I, Robot (2004 film)

Det. Spooner: So, Dr. Calvin, what exactly do you do around here?

Dr. Calvin: My general fields are Advanced Robotics and Psychiatry. Although, I specialize in hardware-to-wetware interfaces in an effort to advance U.S.R.’s robotic anthropomorphizing program.

Det. Spooner: So, what exactly do you do around here?

Dr. Calvin: I make the robots seem more human.

Det. Spooner: Now wasn’t that easier to say?

Dr. Calvin: Not really. No.

With the global uptake of Large Language Models (LLMs) through ChatGPT and other AI chatbots, we have rapidly entered a stage of civilization in which AI robots will become commonplace rather than living only in science fiction films such as I, Robot. These aren’t physical robots just yet (at least not in most households), but intelligent systems that talk, write, plan, and even ‘empathize.’ The line between human and machine intelligence is blurring.

From Fiction to Function: The Rise of Anthropomorphic AI

The “robotic anthropomorphizing program” that Dr. Calvin refers to is no longer just a fictional concept. In real-world terms, it means the systematic design of artificial intelligence systems that present human-like traits: personality, empathy, conversational nuance, ethical reasoning, emotional intelligence, and even a sense of humor.

Anthropomorphizing AI involves more than making a robot look like a human. It includes creating believable, trustworthy, and socially responsive agents that users can relate to. ChatGPT and similar LLMs already embody this principle: they have become digital companions, teachers, therapists, personal assistants, and creative partners. The more they reflect our language and emotions, the more we begin to project humanity onto them. And with that comes a new set of challenges: emotional, psychological, and societal.

Why Robotic Psychiatry Is Now Essential

Just as human psychiatry helps individuals navigate identity, trauma, and relationships, robotic psychiatry will soon be needed to navigate the complexities of AI-human interaction. This emerging field will not focus on diagnosing robots (though self-awareness and behavior loops in AI might warrant future debate) but rather on helping us, as a society, understand, guide, and shape our psychological relationships with intelligent machines. And if AI robots can have ‘hallucinations,’ as computer scientists have ‘diagnosed’ in the GenAI systems they built, then surely a robot shrink is just what the doctor ordered (wink, wink).

On a serious note, here’s why Robotic Psychiatry matters now:

Emotional Attachment: Users are already forming deep attachments to AI systems. Some rely on them for companionship, advice, or emotional support. Case in point: ‘Woebot,’ a chatbot built on cognitive behavioral therapy (CBT), was one of the first therapy bots deployed for clinical use. This raises ethical questions about dependency, trust, and manipulation.

Trust Calibration: People often over-trust or under-trust AI. Robotic psychiatry could play a key role in helping users build accurate mental models of how these systems operate, where their limits lie, and how reliable they are.

Moral and Social Confusion: As AI begins to mimic moral reasoning or claim to “care,” people may respond in unpredictable ways. Clearer psychological frameworks will be needed to mediate between human moral instincts and artificial responses.

Identity and Agency: With humanoid robots on the horizon, questions of identity, both human and machine, will intensify. At what point does simulated personhood become psychologically indistinguishable from real personhood in the eyes of a user?

The Role of the Robotic Psychiatrist

The robotic psychiatrist will be more than a technologist: a new kind of interdisciplinary expert who understands both human psychology and AI architecture. They will:

Consult on AI design to ensure emotional safety in interactions.

Support users dealing with AI overreliance or confusion.

Explore how AI agents can help improve human mental health.

Study the psychological effects of AI companionship and surrogate relationships.

Work alongside ethicists to develop guardrails for human-robot emotional entanglements.

Looking Forward

Just as the industrial revolution required us to rethink labor, and the digital revolution made us rethink identity, the AI revolution now asks us to rethink our relationships: with machines, and with ourselves. In a world where robots can reflect our thoughts, emotions, and even fears back to us, we need professionals trained not only in algorithms but also in empathy.

Dr. Calvin’s job description is no longer the stuff of the distant future. It is, in many ways, the job posting of today.

Note: this article was written by Dr. Carlo Carandang, a practicing data scientist and retired psychiatrist.